This is the story of Stephen Pizzo’s enthralling preparations to shoot the Solar Eclipse on Monday, August 21, 2017. This is the first total solar eclipse across the continental United States since 1918. The Moon will pass between the Sun and Earth, blocking the face of the Sun and leaving only its outer atmosphere, or corona, visible in the sky. The epicenter of the total eclipse will be in Carbondale, IL. That’s where he is now.

Stephen lives in LA. He worked as a camera assistant, focus puller and later as a mechanical design engineer, partnering with Hector Ortega to found Element Technica in 2007. They built some of the best accessories for camera systems, initially focusing on the new RED One that was coming out at the time. Their line of AC-friendly 3D rigs led to the company’s acquisition by 3ality and later by RED.

By Stephen Pizzo

Our project includes a number of firsts that rely on state-of-the-art digital cinema cameras as part of an advanced astro-imaging system built specifically to capture the first US-wide eclipse in a hundred years.

The eclipse on August 21 has been dubbed the Great American Eclipse since the path of totality spans the US from Oregon to South Carolina.

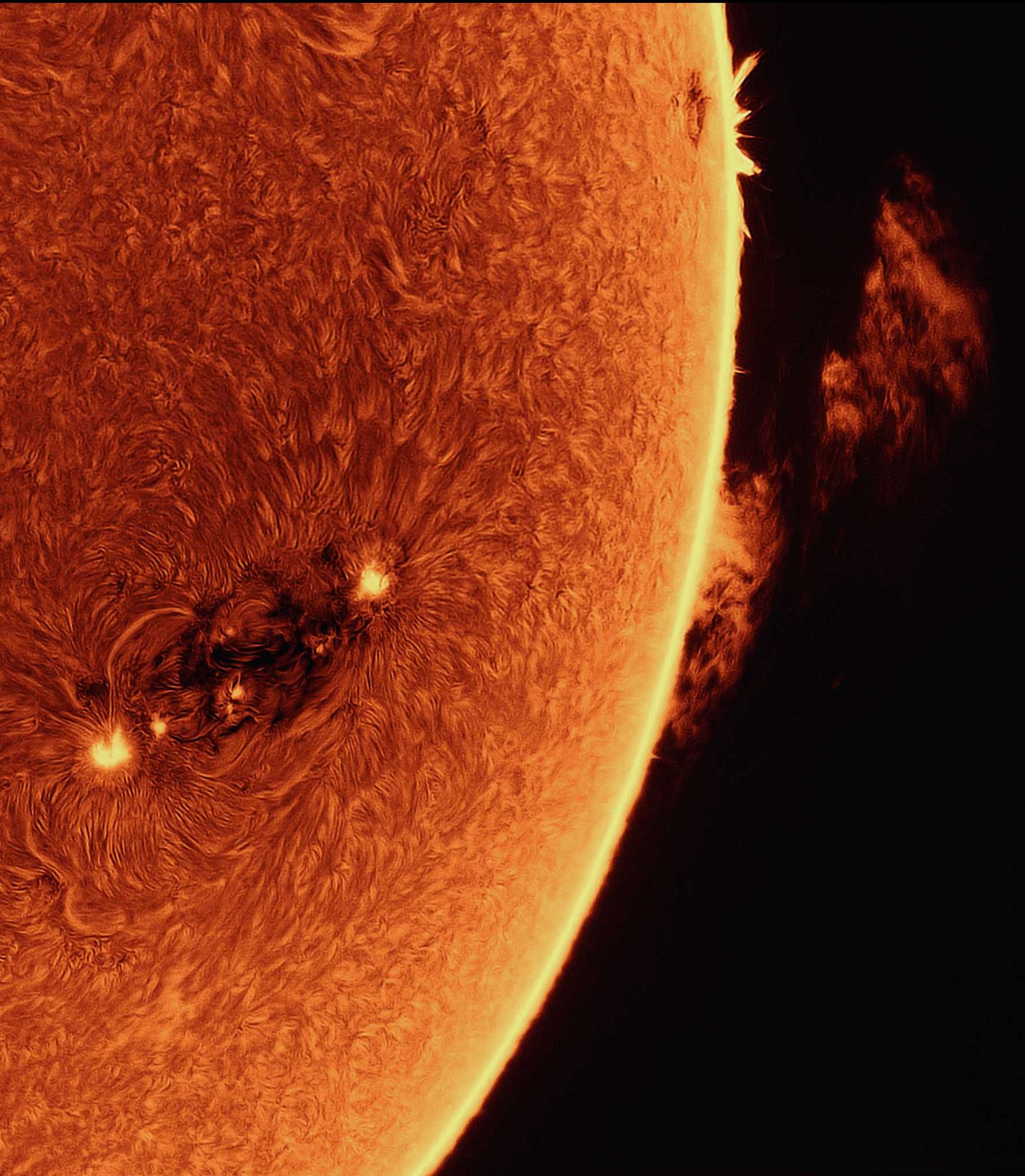

A couple years ago my son and I started playing around with astronomy: nighttime planetary and Deep Space Objects (DSO) as well as daytime/solar. Once we discovered narrow passband optical resonators to observe the chromosphere, it got really interesting since we could actually see the magnetic flux lines of the multiple magnetic fields as the plasma conformed to them. By isolating the specific emission lines, this fascinating world came into view. Unlike nighttime targets, the chromosphere is constantly changing at a scale easily discerned from our vantage point.

As you would expect, I started trying to photograph what I saw using specialized monochrome astronomy cameras. Later I started playing around with a RED Dragon 6K monochrome and eventually got it to produce decent solar images.

Fast forward to October 2016 when I was invited to present my images and give an overview on the process to representatives of NASA from Johnson, Langley and Goddard. During the meeting they explained their plan to put together a big media event around the eclipse, including a live broadcast from the path of totality on the SIU campus (Southern Illinois Univ.) as well as live feeds of the Sun in 3 different wavelengths.

The other attendee at this meeting was Lunt Solar Systems, a leading manufacturer of solar telescopes and particularly the optical resonators that make narrow passband observation possible. I knew them from our amateur astronomy days. Their dedicated solar telescopes and narrow passband optical components have brought sophisticated, wavelength-specific solar observation to university astronomy departments and serious amateurs.

(See narrow passband solar images on Instagram: @longtech.elsewhere)

Within a few weeks we had a plan to build the world’s first mobile solar observatory, complete with a 20″ heliostat to track the Sun, three monochrome RED Dragon 6K cameras, three sets of telescope optics (focal lengths of 1600mm and 1200mm to get the full disk of the Sun in frame) with 230mm and 152mm front element diameters, high magnification telescope optics, a massive optics table and a handful of 24-core dual Xeon workstations networked with a couple of 48TB NAS units. RED is sponsoring the event by providing the monochrome cameras, which are actually quite rare.

We will be continuously outputting a standard broadcast-format live video feed from all three cameras while simultaneously recording the R3D RAW image data (6K full frame at 80 fps, where the best available quality is 14:1 compression), shooting at 1/1000th sec at ISO 500 to 3200.

The live feeds for broadcast will get some real-time processing, including live color grading and wireless application of 3D LUTs with Teradek’s Core COLR. The images then go to NASA in Langley. This is made possible using Teradek’s CUBE encoders to send the HD-SDI images over IP. Teradek is a sponsor of this project. At Langley, graphics are added and the feeds are routed back to Carbondale, to the NASA Edge remote truck, for live switching and distribution to various national and regional broadcasters.

In addition to the live feed, we are recording to RED onboard Mini-Mags at 80 fps, doing staggered reloads.

In the SUNLab, there’s an enclosed rack with 5 dual-processor servers. Two are for image processing and transcoding. Another is an ingest machine. Two more are for storage, each with 48 TB. That’s massive data wrangling!

All this will be covered by the networks, NASA Edge/NASA TV, and the Weather Channel.

Recently, the NYTimes quoted Lou Mayo, NASA astrophysicist and project manager of the SUNLab imaging effort: “The images will be recorded by a mobile solar observatory called the SUNlab that was built by Lunt Solar Systems, a telescope company in Tucson. The observatory connects to a heliostat mounted outside, which tracks the Sun and reflects light at the telescopes. The SUNlab will produce ultra-high-definition images under different wavelengths of light.

“If it’s clear, we’re going to have by far the best imaging of the eclipse that anyone is doing,” said Lou Mayo, a planetary scientist at NASA Goddard Space Flight Center and the program manager for the agency’s eclipse planning.

“The images will be shown first on NASA Edge, a four-hour webcast to be streamed live from SIU Saluki Stadium. Its announcers plan to have a solar physicist nearby to explain the plasma activity the crowd may potentially see, like sunspots, solar prominences and coronal mass ejections.

“Dr. Mayo predicts coverage of the eclipse could reach a billion people. But for the millions lucky enough to witness totality in person, like those venturing to Carbondale, he said the experience could be transformative.”

Brook Willard is working with us as camera systems engineer. He’s managing the 3D LUT boxes. The R3D data will be used to generate 18 and 32 MB still images as well as 4K UHD time lapse sequences. The stills will be posted to the various NASA sites and social media as they come out of processing while the time lapse sequences will be sent to NASA’s media team as a 4th feed for their use in the broadcast.

The image processing workflow is pretty fascinating since we have to pull down several hundred frames at the fastest frame rate possible to create a single, highly detailed, high resolution, narrow passband image. After using REDCine-X Pro to generate the TIFF files, the pile of sequential frames gets hit with a type of super-resolution computational imaging algorithm that literally pieces together the best segments of the hundreds of frames to construct a single highly detailed, highly accurate image. This process, referred to as stacking, generates an image that is rich in information and devoid of noise. The next algorithm reveals the depth of that information through a process called deconvolution. It is this part of the process that is most impressive to watch. Literally before your eyes, finer and finer details become apparent.

I have a pretty fast 2-year-old SolidWorks certified workstation I had been using for solar. One finished frame can come from 200-400 images composited together. It took 90-120 minutes to output a single 4K frame, and 6K crashed the machine. In other words, that’s about 2 hours for one frame and 60 hours for one second of screen time. These new workstations are optimized for solar imaging and can crank out a 4K frame in under 100 seconds and 6K in as little as 3 minutes. That’s about 50-60 minutes for a one-second sequence that previously took 60 hours.
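To make the stacking idea concrete, here is a minimal sketch in plain Python. It is a simplification and not the software we actually run: it ranks whole frames by a basic sharpness score and averages the best ones, whereas real stacking tools also align the frames and pick the best segments within each frame. The function names, the gradient-based sharpness metric and the `keep_fraction` parameter are all illustrative assumptions.

```python
from statistics import fmean


def sharpness(frame):
    """Gradient energy: sum of squared differences between neighbouring pixels.

    A frame blurred by atmospheric turbulence has weaker local contrast,
    so it scores lower. (Assumed metric, chosen for illustration only.)
    """
    total = 0.0
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if x + 1 < len(row):          # horizontal neighbour
                total += (row[x + 1] - value) ** 2
            if y + 1 < len(frame):        # vertical neighbour
                total += (frame[y + 1][x] - value) ** 2
    return total


def stack_best(frames, keep_fraction=0.5):
    """Average the sharpest frames pixel by pixel.

    frames: list of equally sized 2D lists of pixel values.
    keep_fraction: hypothetical tuning knob, share of frames to keep.
    """
    if not frames:
        raise ValueError("need at least one frame")
    keep = max(1, int(len(frames) * keep_fraction))
    best = sorted(frames, key=sharpness, reverse=True)[:keep]
    height, width = len(best[0]), len(best[0][0])
    # Averaging many frames suppresses random sensor noise.
    return [
        [fmean(f[y][x] for f in best) for x in range(width)]
        for y in range(height)
    ]


if __name__ == "__main__":
    tiny_frames = [
        [[0, 10], [10, 0]],   # sharp
        [[5, 5], [5, 5]],     # completely blurred
        [[0, 8], [8, 0]],     # sharp
        [[4, 6], [6, 4]],     # mostly blurred
    ]
    print(stack_best(tiny_frames))
```

With 200-400 source frames per finished image, the same two steps (score, then average the keepers) run over far more data, which is a large part of why the processing is so heavy.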

Since we’ll start the time-lapse processing after the first reload, and since we’ll have several computers each working on a different piece of the sequence, we’ll be able to start handing off highly detailed UHD time-lapse sequences of the eclipse that cut back and forth between the 3 wavelengths within 20-30 of the eclipse beginning.
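The per-frame timings quoted above can be turned into a rough turnaround estimate. This is a back-of-the-envelope sketch: the 30 fps playback rate is an assumption inferred from the "2 hours per frame, 60 hours per second" figures, and the function name and the `workstations` parameter are hypothetical, with an even split of frames across machines assumed.

```python
import math


def render_minutes(screen_seconds, seconds_per_frame,
                   playback_fps=30, workstations=1):
    """Estimate wall-clock minutes to process a time-lapse sequence.

    playback_fps=30 is an assumption inferred from the quoted timings.
    workstations is a hypothetical count; frames are split evenly and
    the slowest machine determines the finish time.
    """
    frames = screen_seconds * playback_fps
    frames_per_machine = math.ceil(frames / workstations)
    return frames_per_machine * seconds_per_frame / 60


if __name__ == "__main__":
    old = render_minutes(1, 2 * 60 * 60)      # old workstation, ~2 h per frame
    new = render_minutes(1, 100)              # new workstation, ~100 s per 4K frame
    split = render_minutes(1, 100, workstations=3)
    print(f"old: {old / 60:.0f} h, new: {new:.0f} min, three machines: {split:.1f} min")
```

Under these assumptions, one second of 4K screen time drops from 60 hours to about 50 minutes on a single new workstation, and less again when the frames are divided between machines.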

At the beginning of May we did a site visit/survey of the SIU campus. Between the SIU chancellor’s office, their physics department, the Carbondale city government and quite a few NASA personnel, it became obvious that this event was growing in scope and significance. In addition to the hundreds of press requests, including documentary crews covering the SUNLab, there is even an IMAX crew from the upcoming IMAX film on Albert Einstein. This film plans to use our post-processed imagery of totality, but they also have camera operators spread out along the path of totality. One of the film’s crews will be recreating the Eddington experiment of 1919, which demonstrated gravitational lensing, thereby launching Einstein into international celebrity overnight.

https://www.space.com/37018-solar-eclipse-proved-einstein-relativity-right.html

In my career merging photography and engineering, I’ve worked on a number of projects I consider significant, but I am particularly proud of the work we’ve done to get here and of the solar imagery we’re producing. It’s really amazing to think that something I was doing purely for fun has evolved into the opportunity to image such a rare event for a global audience. Here’s hoping the weather cooperates.

Images courtesy of Stephen Pizzo. Additional photos coming.