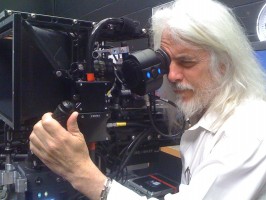

Bob Richardson, ASC received an Academy Award for Best Cinematography for his work on Hugo. He previously won for JFK and The Aviator.

It was the first award of the evening, which was appropriate because Hugo was beautifully shot (in 3D) and masterfully directed: a compelling and complex story brought to the screen with many innovations. It was the first 3D picture for Richardson and Scorsese, the first major motion picture for ARRI Alexa digital cameras, and the first to use all three sets of Cooke prime lenses: 5/i, S4/i and Panchro/i.

We interviewed Bob Richardson and many members of the crew in our February 2012 issue of Film and Digital Times. Here is the text of Bob’s comments on Hugo.

Jon Fauer: I first saw Hugo at the DGA Theatre in L.A. Many in the audience said it was the best 3D they’d ever seen.

Robert B. Richardson, ASC: There is an immediacy to the actors, and a relationship to the actors and the sets. We shot it in 3D, with 3D monitors in front of us. Unlike post-convergence, live 3D is going to become an even better tool in the future. At the present time, I believe, if you’re attempting to do something as an artist, why wouldn’t you use a tool that’s there for you to craft your film, rather than to wait for someone to hand it to you later in post? Today’s schedules don’t allow for 2D to 3D conversion, as you can probably well imagine, with directors editing literally to the day before the release.

I can’t even imagine seeing this motion picture in 2D. I don’t know how to do it. A friend of mine went to see it in 2D the first time. And I said, “What are you doing? Go back and see it in 3D.”

You had a big 3D rig. You were often on a crane. You’re lighting and operating. How do you view the image?

I basically ride most of my shots. I was using the ARRI Alexa eyepiece. I was basically looking through only one camera as opposed to two. When you’re shooting and operating in 3D, unless you have a monitor (which I used in a couple of situations if they were extremely difficult moves to get to), in general, I would use the 2D image in the eyepiece.

I looked through the fixed camera on the rig. The other camera moved to adjust IO and convergence. But I noticed, even with that, I would frame a shot, and my image would not be exactly what I was framing. I noticed it was shifted slightly left or right.

Demetri Portelli, our stereographer, was pulling IO and convergence, and was working the way a focus puller does essentially. His job was to make it a more comfortable experience in 3D.

Talk about the gorgeous shot in the National Film Library, with the shafts of light streaming through the windows.

Weren’t they stunning? It was natural light. It’s a real location, the Bibliothèque Sainte-Geneviève. We were very fortunate that we had these shafts. We lit outside each window in advance, and then the real sun came streaming in. The shot you’re talking about was, for both Marty and myself, an extraordinarily remarkable moment. I set up two dollies simultaneously, knowing the speed at which the sun was going to come, so we’d get a series of shots when the sun was there. And I set one closer for the medium shot and one further back for a wider shot. And then, on top of that, we had the crane on the left hand side move one, two, three, as rapidly as possible, to keep the sun in its proper place to match.

It was fascinating that, as I was setting the shot up, I looked and I saw what you just described. And it was, for me, almost a spiritual event. Because the light became solid. And it made me think of where we sit in this world. When we think that something is not real and may not exist or matter, it is in fact filled with solid elements, regardless of size.

Marty and I noticed that the beams of light were like solid beams. They almost looked like they were made of wood. You felt the solidity of it, and you knew that you were walking through it, seeing people walk through it. Yet you knew it wasn’t solid. There’s a remarkable transformation that takes place in your brain. That, for me, was one of the major moments of the 3D, in terms of something I hadn’t seen to that point.

The 3D enhances that, I guess. But the 2D can’t capture the weight. Well, it’s two dimensions versus three dimensions. You are feeling the sides of the light source. And it’s giving these—you said like pillars of light—it’s giving the light beams mass. In 2D, it’s just a shaft. It doesn’t have mass. That’s where the 3D is phenomenal, in terms of how it transforms the emotion for me.

In the bookshop scene, when the kids first walk in, it’s just a simple 3-shot from the floor looking up. But the entire book store had dimension and weight. You could feel the weight of the books. I could feel the weight and how powerful this place was in terms of Isabelle’s life. And for her to bring Hugo there was just remarkable when you see these small things that, in an ordinary shot, would have meant so little. Rob Legato did the visual effects to work with depth in 3D. The influence in 2D cannot be the same. The quality and the mystery is different. What he created was a way to give you depth, and knowing he had 3D, how it would work, and when you could fool or not fool.

What about the look of the film and Lumière autochromes?

We began early with a series of screenings at the BFI (British Film Institute) and with Marty’s selections. The Autochromes were a principal place for leaping off. But we also looked at early films that were tinted, toned and also hand-colored, including The Great Train Robbery, Nosferatu, and the work of Méliès. You can sense that in some of the tinting and toning in the flashbacks, with Hugo’s father. Now, when Hugo was not with him, we did sort of a tint of a blue with a toning of an amber. And particularly, in night time scenes prior to the fire coming up the stairs.

(Autochrome is an early color photography process, patented in 1903 by the Lumière brothers in France. It was an additive technique and the major color photography process before subtractive color film was introduced in the mid-1930s.)

Autochrome became the basis by which we looked at that time period. A look up table was created. It’s not a totally accurate mirror of Autochrome, but it is something that we felt closely resembled it, and close enough to give us the impression that we could work in degrees from it. For example, the majority of the material might be working at 50 or 60 percent of the Autochrome. But the flashbacks, where you see Méliès with his wife, those were as high as 150 percent of the Autochrome.
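Working "in degrees" from a look can be pictured as a simple blend between the ungraded image and the fully graded one. The sketch below is only an illustration of that idea, not the production's actual Baselight setup: the `warm_look` function is a hypothetical stand-in for the Autochrome-style table, and the linear blend is an assumption. A strength of 0.5 lands halfway to the look, 1.0 is the full look, and 1.5 pushes past it.

```python
def apply_look(pixel, look, strength):
    """Blend an RGB pixel (values 0.0-1.0) toward its graded version.

    strength 0.0 leaves the pixel untouched, 1.0 applies the full look,
    and values above 1.0 extrapolate beyond it. Results are clamped to 0-1.
    """
    graded = look(pixel)
    return tuple(
        min(1.0, max(0.0, p + strength * (g - p)))
        for p, g in zip(pixel, graded)
    )


def warm_look(pixel):
    # Hypothetical stand-in for a look up table: lift red, pull down blue.
    r, g, b = pixel
    return (r * 1.10, g, b * 0.80)


neutral = (0.50, 0.50, 0.50)
half = apply_look(neutral, warm_look, 0.5)   # roughly the "50 percent" case
full = apply_look(neutral, warm_look, 1.0)   # the look as designed
over = apply_look(neutral, warm_look, 1.5)   # roughly the "150 percent" case
```

With these made-up numbers, the blue channel of a mid-gray pixel falls from 0.50 to about 0.45 at half strength, 0.40 at full strength and 0.35 at 150 percent.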

Tell me about dailies, post and DI…

We had a timing suite at Shepperton. It was a small DI room where we timed all of our dailies. Greg Fisher was the timer here and he took it all the way through the finished film.

This took away the question marks. The thing is, it’s vital for a director to see this, especially when you’re working with something pretty new. We went into the timing suite every day. Financially, we had the projection room anyway. So we had the room set up. All we were really adding was an individual to do the timing. We were not hiring another company to do the work to finish it. We did it on a Baselight.

That all worked quite well for me. I was able to work digitally with Greg and keep in the loop. Marty could see the dailies and give feedback on what he felt would work better or what could be improved. That became a faster way of finding what the look of the movie was, so there was less necessity at the tail end to refine, because we were already well within the ballpark.

You had a luminous golden color in many scenes. How did you achieve that?

With the aid of a look up table, I lit the Méliès apartment with only tungsten lights. In other scenes, I would have cool overheads, as if the daylight were coming in. And then I would add various colors on the ground, depending whether it was going to be white or warmer than white.

For Hugo’s apartment in the station, there was a combination of lights. We put gels on the units to give it the look. We used blue top light, blue beams, with white light on the bottom that was down on the dimmer about 40 percent. I would change my color temperature directly on the Alexa camera, depending upon the amount we were searching for. So you might be looking at something that was shot at 3200 or 4500 or even 2300. It would depend on which scene.

What were you using in the station, where you have a lot of big areas and really strong backlight?

Those were all Dinos or 20Ks. In most cases, that light was full intensity 100 percent, but I would gel. I might gel them ½ blue, or ½ straw, depending on what I was looking for, late afternoon, or if I wanted to use a cooler light. The colors were very different from what we’ve experienced in the past. With film, you would add a filter.

How did you rate the ARRI Alexa cameras?

Alexa is an 800 ASA camera. But essentially we were shooting at the equivalent of 400 ASA because the mirror took away one stop.
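The arithmetic behind that rating is the usual rule that each stop of light lost halves the effective sensitivity. A minimal sketch, with function names chosen here purely for illustration:

```python
import math


def effective_ei(base_ei, stops_lost):
    """Effective exposure index after losing light: each stop halves it."""
    return base_ei / (2 ** stops_lost)


def stops_between(ei_a, ei_b):
    """Number of stops separating two exposure indexes."""
    return math.log2(ei_a / ei_b)


rated = effective_ei(800, 1)        # one stop lost to the 3D rig's mirror
difference = stops_between(800, 400)
```

Here `rated` comes out at 400, matching the figure Richardson gives, and `difference` is one stop.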

Is that why you went with Cooke 5/i (T1.4) lenses?

I gravitated toward the notion of starting with the very best. We can fully remove quality later. But it’s virtually impossible to add quality back once it’s gone.

But, we went with Cooke 5/i primes for another reason: to use the metadata. We were pulling /i data from the lenses. It was early on, but we said we should try this because it was available to us. Anything that helped the visual effects, we did.

Some of the shots had a gorgeous halation. Like when Hugo is backlit, and there are glints on the hot spots…

Part of that was contributed from the digital intermediate where we put in a very light diffusion to create that, and blend it in. If you watch the outside edges of a number of those images, you’ll see vignetting. In that vignetting, you’ll see diffusion in various degrees. Sometimes it enhanced. Hopefully you didn’t notice it. That’s a good thing. But it does bring your eye in.

We would also use a small digital vignette around most images that were dark—around the whole image. It would vary between 10 percent, 20 percent.

When I blow somebody out with a strong backlight, that’s a natural halation. It’s going through the lens and the mirror.

You could see that not only on Hugo, but when I shot the Station Inspector from Hugo’s side of the jail cell: he was lit with hard backlight and that halation is just the natural property of the lens. They’re in combination.

That was a learning curve. At first, as I was learning how to shoot 3D, there were a lot of yeses and nos. Don’t use backlight. Don’t go above a certain lens. A strong backlight off a white surface can cause a level of pain when you try to blend two eyes.

When you can’t settle them properly you find yourself using wax or something to take that sheen down, or cutting it when you see that it is too much of a problem. Sometimes it causes ghosting as well, which is pulled out in post. All 3D films have sort of that similar problem.

But, in the long run, you didn’t worry about the backlight?

No. In the long run, the decision was to have 3D move to me, not me move to 3D. Certainly taking into account the limitations or the issues that everyone was bringing up, we determined what was truly an issue, rather than acting out of fear. We said, “Let’s shoot this movie the way we want. And then, let’s make a decision if we’re finding that it’s uncomfortable.”

One excellent thing about 3D is that it does not let you miss. If it’s uncomfortable, you know it’s uncomfortable, and there’s something bothering you. It’s immediate. You’ll want to react, unless you’re fatigued. We sometimes missed things due to a level of fatigue. But there were enough of us watching it that someone would catch it, generally Demetri, our stereographer.

And they’re watching it on what?

We all had JVC (GD-463D10 46-inch 3D LCD) HD monitors. They were all consumer monitors. We did the best we could to keep them consistent. Greg Fisher calibrated them. They were checked regularly. Sometimes, Marty would ask, “Well, why does it look like this?” Because we were now looking at an almost finished product. For a director, the quality of output in HD is superb. It isn’t flickering. We’ve all been accustomed for a long time to flickering on video assist monitors when shooting with film. The quality of motion picture film standard definition video playback has generally been quite miserable for most people. ARRI has made strong headway with their HD video assist. And now with the Alexa or other cameras that are being used, you have extremely precise images.

Talk a little more about the look of Hugo.

One of my decisions, at the very beginning, was this: I was not going to shoot the Alexa to make it look like film. I did not want to use the film look up table. I wanted to work with the Alexa as Alexa. What its strengths were, its merits, what its weaknesses were, that was what I wanted to incorporate into this project. If its color space was here, I was going to use that color. If it could give me these types of colors, I was going there.

I decided right away, looking on the Baselight, not to use the look up tables that told me I was shooting on film, you know, on 5248 or ‘93. I don’t want any of this. I said, “Let’s stop now. I don’t want to have a film look up table that emulates film. We’re doing digital cinema. We’re shooting a digital cinema production.” And people were nervous. They were saying, “Oh, but we have 2D releases, releases on film.” But Laser Pacific did an astounding job showing us that we were on the right track.

You did some very intricate close-ups of the clocks and the automatons in 3D…

Those were all done with long lenses: very tight, macro work on the gears. We ended up using 135 mm close-focus Cooke S4/i lenses. Sometimes we added a diopter on them. It took a bit of work to find the right combination. What’s complicated in doing close-up shots in 3D is getting two lenses to match exactly, and coordinating the focus and everything else. So, when we had those tight shots, generally it’d be a 135 mm lens or something like a 135 with a +½ diopter or +1 at most.

Because you were doing moves, your assistant was pulling focus the whole time. It was also an incredible job.

Yes. Gregor Tavenner is a remarkable focus puller. Not all those shots had diopters on them. Some of those shots were moving down following the hands, or were very small moves. But, what Gregor does is he generally will anticipate focus ahead of time.

When he sets focus he anticipates. You know, everyone works off of a remote focus unit now, pretty much. He marks the lenses so that when he adds a +½ diopter, for example, he already has focus discs pre-calibrated for the remote focus device. He’d just put the focus disc on for the +½ diopter. And when we went to the +1 diopter it would be a different ring. We had to experiment to see how close we wanted to go in 3D, which is sometimes just hard to do. Marty would make an alteration in the shot to accommodate the close focus.
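Why each diopter needs its own focus disc follows from basic optics: a close-up diopter adds its power (in diopters, or 1/meters) to the vergence the lens is already focused for, so every engraved distance shifts closer. The sketch below uses the thin-lens approximation and measures distance from the diopter itself. Both are simplifying assumptions, and it is not a description of how the discs on Hugo were actually calibrated, which the interview describes as being done in advance by the focus puller.

```python
def focus_with_diopter(lens_focus_m, diopter):
    """Approximate focus distance (meters) once a close-up diopter is added.

    lens_focus_m is the distance set on the lens (use float("inf") for
    infinity); diopter is the strength of the close-up lens, e.g. 0.5 or 1.
    """
    total_vergence = 1.0 / lens_focus_m + diopter
    if total_vergence == 0:
        return float("inf")
    return 1.0 / total_vergence


infinity = float("inf")
half_at_infinity = focus_with_diopter(infinity, 0.5)  # lens at infinity, +1/2
one_at_infinity = focus_with_diopter(infinity, 1.0)   # lens at infinity, +1
half_at_two_m = focus_with_diopter(2.0, 0.5)          # lens at 2 m, +1/2
```

Under these assumptions, a lens set to infinity focuses at about 2 meters with a +½ diopter and 1 meter with a +1, and a lens set to 2 meters with a +½ comes in to about 1 meter, which is why the marks for one diopter cannot be reused for another.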

What I was going to say about camera assistants is that many of them are now working off of HD monitors to see focus. The difficulty with that is, number one, you don’t always have a monitor, for example if you’re doing Steadicam, or more complicated moving shots, where you have to actually ride or be a part of the system.

If they rely too much on the monitor, they may lose their eye for distance. I worry that they may lose some of their art or skill. What happens if you shoot on film next and you don’t have HD video assist? Not every show is going to be on an Alexa. Plus, the reaction time can be a little bit delayed if they’re looking at a monitor, because by then it could be too late. Of course, one of the values of the monitor is that if there’s a little buzz, they can see it.

What about you, looking through an electronic viewfinder?

I find that looking through an electronic viewfinder is complicated. I can’t really see focus as clearly as before. But, more than that, what did take place was that I was quite worried at the very beginning, would I be able to light?

Would I be able to light through its small television eyepiece? Because the electronic viewfinder is really a small eyepiece that’s a television. So, what I began to realize is that when I was doing initial tests for darkness and sensitivity I would have a camera with me, an Arriflex 435, a regular ARRI film camera body. And I used the same lens. I put it up next to the monitor on the dolly. And I trained myself.

I would look to see if I were seeing the same thing through both cameras. At a certain point, I stopped. I didn’t need the film camera anymore. I just worked off of the Alexa. In combination with 3D and being able to go off the monitor, I was also seeing things I had never seen before. And so, that changed my perception on how to light.

Hugo is a story and a film that works on many levels.

What we need to recognize is that this movie is for kids. Kids can experience this movie, which is a tale of an older time, and enjoy the older times. They’re watching older films within the film. I love when kids come out and they are mesmerized that it’s the early works of a pioneer from the turn of the last century. We have a hard enough time getting kids to watch films from the ‘70s. How do we get people into movies and to love them?

But also the tale is so brilliant. It works on all levels. Marty did a fantastic job by turning some of the early Méliès works into 3D at that final premiere.

photo above: Todd Wawrychuk © A.M.P.A.S.